Google BigQuery is a powerful data warehouse for analysing large-scale datasets, but as your data grows, performance optimization becomes crucial.

Below, I’ll walk you through some strategies to reduce your BigQuery cost and ensure cost-effective, high-performance data analysis.

Hint: Tips 2, 8 & 9 are low hanging fruit if you’re after quick fixes!

1. Avoid SELECT * in your Queries

In the past, using SELECT * versus specifying individual fields made little difference because the entire dataset was retrieved (and you were billed in full), then the data was filtered and presented to you.

With data warehouses like BigQuery, the columns retrieved directly impact your costs. By specifying only the fields you need, you can significantly reduce query expenses and improve performance.

Instead of:

SELECT *

FROM `project.dataset.events`Use:

SELECT event_date, event_name, user_pseudo_id

FROM `project.dataset.events`By being intentional about which fields you retrieve, you significantly reduce the amount of data scanned and improve query performance.

2. Use Approximate Counts for Large Aggregations

We don’t always need an exact number with 100% accuracy when working with large datasets. For example, instead of 10,601,340, most analyses would be just as actionable with an approximation like 10,600,000. This trade-off can lead to significant cost savings.

BigQuery offers approximation functions like HLL_COUNT_DISTINCT and APPROX_COUNT_DISTINCT, which use HyperLogLog++ for estimating distinct counts. HyperLogLog++ is a smart algorithm that estimates unique counts without having to go through all the data. It’s fast, uses less memory, and is typically accurate within a small margin of error. Its tolerance depends on the configuration but usually provides a relative error of around 1% for large datasets, making it highly reliable for most analytical purposes.

For instance, consider a GA4 dataset where you need to count unique users:

SELECT APPROX_COUNT_DISTINCT(user_pseudo_id) AS approx_user_count

FROM `project.analytics_123456.events_*`

WHERE _table_suffix BETWEEN '2024-01-01' AND '2024-01-31'This query will estimate the number of unique users for that period, reducing computational costs compared to using COUNT(DISTINCT user_pseudo_id).

3. Run Queries with Sampled Data First

I’ve watched seasoned Analysts run queries resulting in petabytes of data to only realise they missed a few fields in their original query and run it all over again.

I highly recommend using TABLESAMPLE, where you can only query a percentage of your table and when you’ve perfected your query you can simply comment out a single line.

SELECT event_date, user_pseudo_id

FROM `project.dataset.events`

TABLESAMPLE SYSTEM (1 PERCENT)

WHERE event_date = '2024-01-01'With this simple addition of TABLESAMPLE SYSTEM (1 PERCENT), your BigQuery bill for that query has reduced to 1% of the entire query.

I should caveat, this only returns 1% of the data so it’s great for ‘work in progress’ queries, not so great for production.

4. Introduce a Semantic Layer

A semantic layer is something that sits in between your dashboard and BigQuery. It allows you to define rules around caching and can provide queried data without running it again on BigQuery.

Let’s say you ran a fairly sizeable query for the last 30 days and it had terabytes of data returned. BigQuery bills you for each query, so if three other colleagues ran the exact same query, BigQuery will bill you project four times for the same query essentially.

With a semantic layer, you can introduce a caching layer that sits between your dashboards and BigQuery. You can instruct it to run once per project, and all subsequent queries are cached results which don’t get run on BigQuery. This saves you three additional executions and three additional query costs to add to your bill. [If you’re wondering how this works in practise, it sets up another “pseudo-warehouse” which you query from instead of BQ directly].

BigQuery has its own cache history, but it is done on a per user level instead of a per project level. So multiple users querying the same data don’t get any performance gain from this. Only if the same user queries the exact same data will you see a performance improvement.

Looker (not Looker Studio) has its own semantic layer called LookML, where you can add a great deal of context to your data to facilitate non-technical users in getting their answers easily.

If you’re not using Looker, there are some newer players doing some great work with semantic layers. DBT and Cube seem to be making great strides in this area.

5. Partition and Cluster Your Tables

In order for your GA4 data to truly be performant, it’s important to understand that the original GA4 data structure doesn’t actually meet best practices. Sharded data (when a new table is created for each date) doesn’t allow us to take full advantage of partitioning, which is a great optimization technique for databases like BigQuery.

To address this, I recommend rebuilding the GA4 tables into a single unified table and adding these optimizations:

- Partitioning: Partitioning organizes the table by a specific field to segment data for easier retrieval. For GA4, I typically partition on

event_date, or occasionally onevent_timestamp(hourly) for large clients. This setup optimizes querying data either daily or hourly. If you’re using a single table, ensure that every query requires a partition filter to pull data. This prevents full-table scans and keeps query costs low. - Clustering: Clustering groups similar values together within a table. At a minimum, I cluster on

user_pseudo_idandevent_name. This allows for optimized queries when analyzing particular user journeys or selecting specific events.

When creating the table, I also unnest several useful datapoints like page_location, and I add new dimensions such as landing_page and page_path. These enhancements improve query performance and provide richer insights.

6. Use Incremental Strategies

When you have a dataset being loaded into BigQuery with a third-party connector, ensure it’s only loading new data and not replacing existing data. This process, known as an incremental strategy, can have a huge impact on performance and costs.

I cannot stress enough how important this is. I’ve seen cases where petabytes of data were reloaded unnecessarily, replacing years of data, when all that was needed was yesterday’s data. By adopting an incremental approach, you save time, storage, and processing costs.

7. Pre-Aggregate Your Data

Data like GA4 isn’t optimized for analytics out of the box. Pre-aggregating frequently used metrics can drastically improve performance and reduce query costs. For example, you can create summary tables to store pre-computed metrics such as:

- Event counts (e.g., total number of events per day)

- Purchase revenue (e.g., total revenue grouped by day or campaign)

- New user counts (e.g., distinct users identified on their first visit)

- Active user counts (e.g., daily or monthly active users)

While pre-aggregation is great for metrics like these, be cautious when working with user data that depends on session counts or unique users over time. Aggregations on pre-aggregated data, such as calculating “sessions per user” for a month, may lead to inaccuracies because users can be double-counted across different time periods.

This method helps simplify queries and speed up reporting for commonly used metrics, ensuring both accuracy and cost-effectiveness.

8. Enable History-Based Optimizations

BigQuery’s history-based optimizations can improve query execution over time. Make sure this feature is enabled in your settings to allow BigQuery to leverage historical execution plans and statistics for better performance.

To activate this feature at the project level, you can use a generic template or a specific example:

Generic template:

ALTER PROJECT `PROJECT_NAME`

SET OPTIONS (

`region-LOCATION.default_query_optimizer_options` = 'adaptive=on'

);If you had a project named growth-runner in the EU region, this is what your query would look like:

ALTER PROJECT `growth-runner`

SET OPTIONS (

`region-eu.default_query_optimizer_options` = 'adaptive=on'

);This enables adaptive optimizations for your queries, improving efficiency. For more details on history-based optimizations, refer to Google Cloud’s documentation.

9. Understand Physical vs Logical Storage Billing

BigQuery charges for both physical and logical storage, but it’s important to understand the difference:

- Physical storage refers to the actual space your data occupies, including compressed data and historical snapshots (time travel).

- Logical storage is the size of the data when fully decompressed and includes all columns, even if they’re sparsely populated.

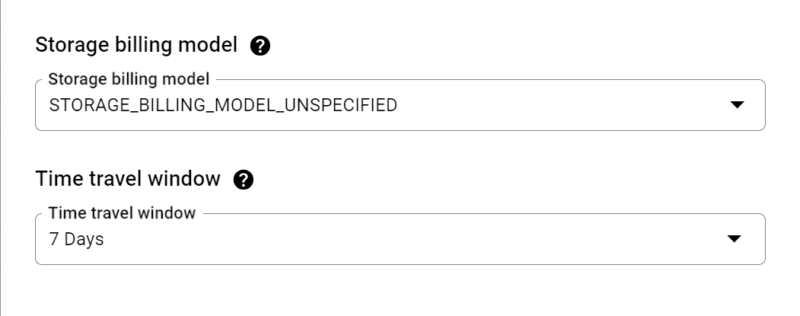

Time travel, which allows you to query previous states of a table (included accidentally deleted data), can significantly increase physical storage usage. While it’s a powerful feature, it’s worth periodically reviewing your historical snapshots and determining if they’re necessary to retain. For example, lowering the default time travel window from 7 days to 1 day can reduce physical storage costs without impacting most workflows.

Settings are managed on a dataset level, you can simply click edit and find them nested in these sections:

Being mindful of these differences helps you manage costs more effectively while leveraging BigQuery’s powerful features. For more information about time travel, refer to Google Cloud’s documentation.

10. Consider BigQuery Slots for Cost Control

If your BigQuery usage exceeds $1,500 per month, it might be time to invest in BigQuery slots. Slots provide a flat-rate pricing model, offering predictable costs and sufficient compute capacity for heavy workloads.

With slots, you purchase a dedicated amount of BigQuery processing capacity, measured in slot-hours, for a fixed monthly cost. For example:

- On-demand pricing: Queries are billed based on the amount of data processed. For large-scale analytics, this can lead to unpredictable and high costs.

- Capacity pricing: Instead, you pay a flat fee (e.g. ~$1,500 per month for 50 slots) and are limited to slots for query execution.

Example Scenario:

Let’s say your team executes many complex GA4 queries daily processing hundreds of terabytes of data. With on-demand pricing, your monthly costs could exceed $2,000. But, by purchasing 50 slots for ~$1,500 per month you now have a predictable spend per month.

Please note that with slots, queries might take slightly longer to execute during peak times as capacity is shared across all users.

To learn more about BigQuery slots and determine the right capacity for your needs, refer to BigQuery Slot Pricing Documentation.

Final Thoughts

Optimizing GA4 data analysis with BigQuery requires a mix of technical best practices and strategic planning. By adopting these techniques, you can significantly improve query performance, reduce costs, and unlock deeper insights from your data.

It goes without saying, if you need any support with this feel free to get in touch with us, we’d love to help.

If this was useful please leave a comment below as I’d love to hear back from you!

I don’t see any physical storage (0). It’s normal?

Hey João! I’ve quickly created some instructions for you, let me know if this worked for you?

https://scribehow.com/shared/Change_your_Dataset_Billing_Model_in_Google_BigQuery__QeTA0rcTRbCAa8y7JIMiDw